How to Manage the Risks with GPT-4 API and OpenAI Updates

OpenAI has an entire page dedicated to API data privacy, wherein they promise that protecting valuable user data is “fundamental to our mission. We do not train our models on inputs and outputs through our API.”

However, that doesn’t mean that all the risks of privacy and other concerns magically disappear just because they’re acknowledged. In fact, that makes it even more important for you to understand what risks are present with the GPT-4 API and OpenAI updates that are being made as a result.

What is GPT-4?

OpenAI launched GPT-4 in March 2023 as its most capable model to date. The large language model (LLM) is the successor to GPT-3.5, the model behind the original ChatGPT. The new LLM debuted alongside a 98-page technical report describing a range of accomplishments. According to the report and early demonstrations, GPT-4 has the capability to:

- Pass major exams like the bar, LSAT, and others (with impressive scores, no less)

- Write coherent books

- Discover pharmaceutical drugs

- Create video games

- Turn a napkin sketch into a full website

- Generate an entire lawsuit with a single click

What makes GPT-4 different is that it has multimodal capabilities. That means it can use text and image input to generate text-based output.

Users can upload a photo for the LLM to respond to, or include a photo alongside text in their input, giving it more capabilities and, potentially, more risk. A slated API release will allow GPT-4 to be incorporated into software and apps for a level of streamlined automation that has not been seen before.

The report includes its own section on the “Potential for Risky Emergent Behaviors” and cites an instance where the model used text messaging to convince a human to solve a CAPTCHA for it. Plus, it still hallucinates (confidently produces false or fabricated information) and shares several of the biases of its predecessors.

What are the risks of the GPT-4 API?

Even though OpenAI has made earnest attempts to improve GPT-4 and address many previous concerns, there are still several potential risks present. When considering the use of this tool, consider the following:

Unethical behavior: Even though there has been extensive testing, there’s still a risk that certain answers could be inappropriate or incorrect. Undesirable results are most likely when models deal with sensitive topics and confidential information.

Unpredictable errors: Machine learning doesn’t make error remediation easy. Because a model’s behavior is learned from data rather than explicitly programmed, you can’t anticipate every failure in advance, which raises the risk of faulty logic or unexpected results.

Data dependency: This tool relies entirely on machine learning, and it takes a massive amount of data to train models. If that data is poor in quality or incomplete, the output can be poor as well. Even GPT-4 has only limited knowledge of events after its training data cutoff in September 2021.

Misuse: Tools like this bring an increased risk that personal information will be misused, or that data will be shared without consent or permission. Proper training for the people using them can help prevent misuse.
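One practical way to reduce the misuse risk is to strip obvious personal details from text before it is sent to any AI service. The snippet below is a minimal sketch of that idea in Python, not a complete solution: the two patterns and the `redact` helper are hypothetical, illustrative names, and they only catch simple email addresses and phone-like numbers.

```python
import re

# Illustrative patterns only (assumption): real-world PII detection
# needs far broader coverage than these two expressions.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Summarize this note from jane.doe@example.com, phone 555-123-4567."
    # Only the redacted version would be passed on to an AI tool.
    print(redact(prompt))
```

A filter like this is a safety net rather than a guarantee. It works best alongside the team training mentioned above, since people still need to know what should never be pasted into a prompt in the first place.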

OpenAI is engaging experts to help address these risk factors, but you also need to manage them for your business.

After all, OpenAI published this report for the sake of publicity, not public understanding. They even acknowledge that the report lacks transparency, which they attribute to “the competitive landscape and safety implications of large-scale models like GPT-4…”

So, with all that we do know, what don’t we know about GPT-4 and how it may or may not help business?

How can you manage risks and maximize AI?

As with any new technology, GPT-4 should be used judiciously and for specific needs. Tools like ChatGPT and other AI should play a supporting role in your business, not act as a replacement for your people. Here’s an example based on ads that we’ve seen.

“Use AI to replace your content writers and save big!”

That’s not what ChatGPT and other AI tools are for. They can’t yet match a human writing style, and no matter how well they handle grammar, they can’t understand the nuances of speech and emotion.

You can, however, use AI tools to help your writers create better content and deliver better outcomes as a result. You can use them to generate keywords, check for SEO best practices, and even come up with better words to replace your own (we’re looking at you, Grammarly).

Managing risks is all about understanding the limitations of AI and machine learning. Although they have come a long way, they are nowhere near perfect and certainly not infallible. As these tools become more commonplace, it’s likely that regulations will be considered that help govern their use, but until then, many businesses are left to figure out what is and isn’t “safe” on their own.
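Until clearer rules exist, one simple safeguard is to route AI-generated drafts through a person whenever they touch on risky subjects. Here is a minimal Python sketch of such a review gate. The term list, the `ReviewDecision` class, and the `review_gate` function are all hypothetical names chosen for illustration, and each business would need to define its own criteria.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical examples of topics that should always get a human look.
SENSITIVE_TERMS = ("diagnosis", "lawsuit", "social security", "password")


@dataclass
class ReviewDecision:
    needs_human: bool
    reasons: List[str]


def review_gate(draft: str) -> ReviewDecision:
    """Flag an AI-generated draft for human review before it is used."""
    reasons = []
    if not draft.strip():
        reasons.append("empty output")
    lowered = draft.lower()
    for term in SENSITIVE_TERMS:
        if term in lowered:
            reasons.append(f"mentions sensitive term: {term}")
    return ReviewDecision(needs_human=bool(reasons), reasons=reasons)


if __name__ == "__main__":
    decision = review_gate("Here is a draft response about your lawsuit.")
    print(decision.needs_human, decision.reasons)
```

A keyword check like this is deliberately crude. It will not catch hallucinations or subtle bias, so it complements human judgment rather than replacing it.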

Finally, stay updated on the latest news and information related to GPT-4 and OpenAI, and when you’re choosing partners, opt to work with those who are also at the forefront of the AI conversation. The best protection you can provide is education for yourself and your team. Pay attention to updates and new releases from OpenAI, as well as insights that emerge as access to GPT-4 rolls out to everyone.

Speaking of partners, ask how Smith.ai can help

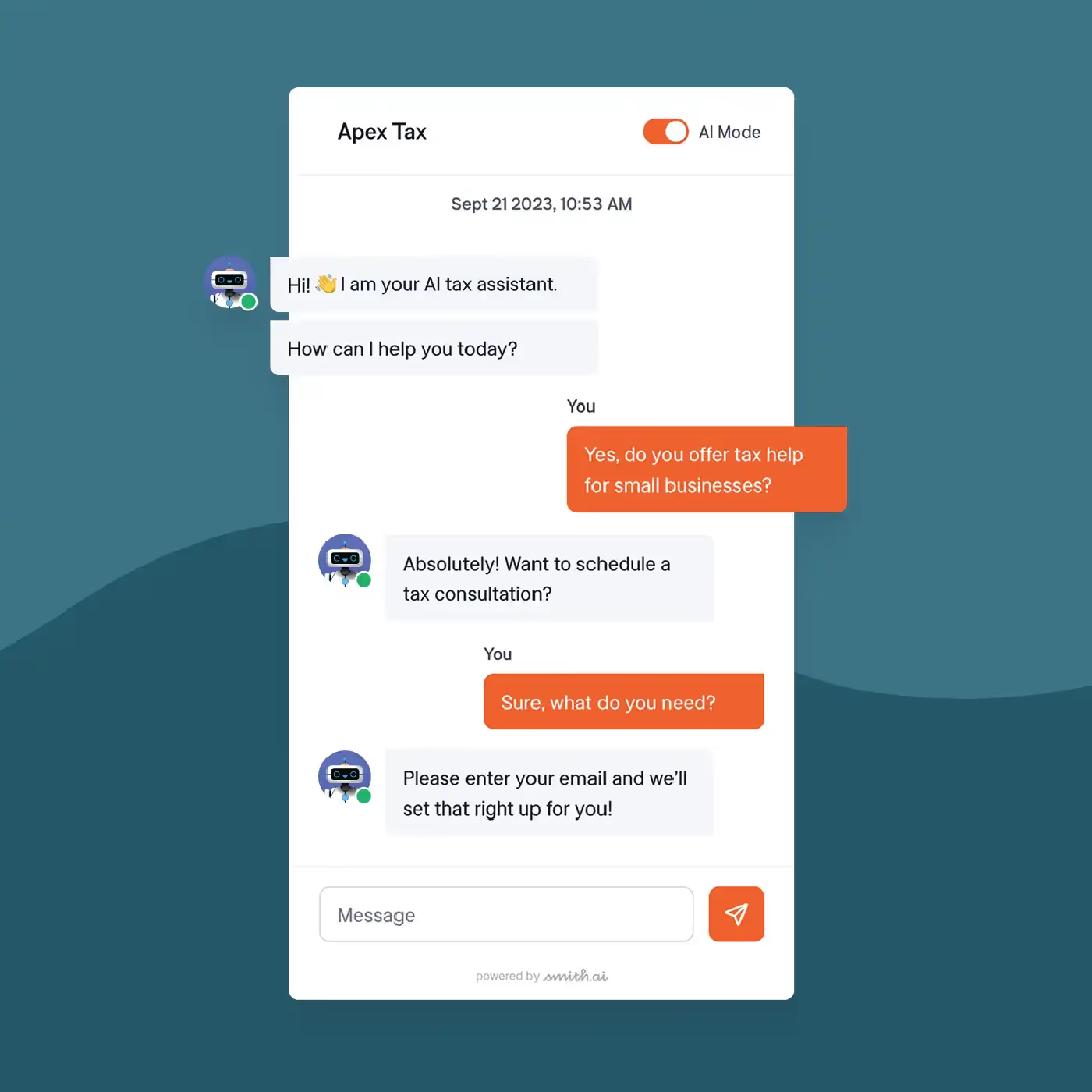

When it comes to managing risks and maximizing the benefits of your resources, no one understands you better than the virtual receptionists at Smith.ai. Not only do we keep up with the world of AI and machine learning, but we can also help you automate tasks and alleviate some of the work you do.

Our team of experts can act as your 24/7 answering service to ensure you never miss an opportunity, while also handling lead intake and appointment scheduling so your team can spend more time investing in new tools and training. Plus, we offer AI-based support of our own through our chat services.

To learn more, schedule a consultation or reach out to hello@smith.ai.

Take the faster path to growth. Get Smith.ai today.
